In classrooms across the United States, a quiet but profound technological revolution is unfolding as generative artificial intelligence becomes an inescapable part of the educational landscape. Yet this transformation is happening largely without a map. A significant “policy vacuum” at the federal and state levels has effectively left the nation’s approximately 13,000 school districts to navigate the complex ethical, practical, and pedagogical challenges of AI on their own. This hands-off approach is fostering a fragmented patchwork of local rules and experiments, leading to vast inconsistencies in how students are being prepared for a future where interaction with AI is not optional but essential. Without cohesive, top-down directives, the responsibility for building the guardrails for this powerful technology has fallen squarely on the shoulders of local educators and administrators, many of whom are struggling to keep pace with its rapid evolution. This decentralization threatens to deepen existing inequities and leaves a generation of students with wildly different levels of preparation for the AI-driven world that awaits them.

The Policy Void and Its Consequences

The Double-Edged Sword of “Local Control”

The primary driver behind this policy gap is the deeply embedded American tradition of “local control” in education, a principle that has historically empowered communities to tailor schooling to their specific needs. However, this model is proving ill-suited for a globally transformative and rapidly evolving technology like artificial intelligence. While some state boards of education have begun to offer guidance, toolkits, and recommendations, very few have issued mandates that would require local districts to create specific AI policies. This deference to local autonomy means that the ultimate decisions, which range from enthusiastic district-wide adoption to outright classroom bans, are made in isolation. Consequently, thousands of school districts are being forced to reinvent the wheel, individually tackling complex issues of data privacy, academic integrity, and ethical use without the benefit of a standardized framework. The result is a chaotic and inefficient approach to a systemic challenge.

The absence of clear directives has inadvertently fueled the rise of “shadow use,” a phenomenon where teachers and staff independently adopt generative AI tools for their work without explicit approval or oversight from their school’s IT and security departments. This trend is not a fringe activity; a February 2025 Gallup poll found that 60% of K-12 teachers are already using AI in some capacity in their professional lives. While many educators are leveraging these tools to save time on lesson planning and administrative tasks, this unmanaged implementation creates significant vulnerabilities. Without a formal vetting process, schools risk exposing sensitive student data to third-party applications with questionable privacy policies. Furthermore, this ad hoc adoption contributes to a growing disconnect between official school policy and actual classroom practice, undermining efforts to create a consistent, equitable, and secure instructional environment for all students.

A Landscape of Growing Inequity

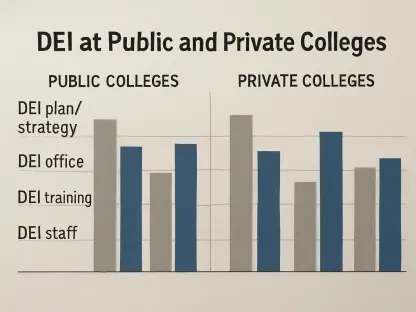

The decentralized, district-by-district approach to AI policymaking is not just inefficient; it is actively exacerbating the deep-seated inequities that already plague the American education system. A stark divide is emerging between well-resourced school districts and their underfunded counterparts, creating a new and formidable dimension to the digital divide. Data from a RAND-led panel highlights this disparity, revealing that teachers and principals in higher-poverty schools are approximately half as likely to receive official guidance on the use of AI compared to their peers in wealthier districts. This lack of support and resources means that students in disadvantaged communities are far less likely to gain exposure to AI tools in a structured, educational setting. As a result, they are being denied the opportunity to develop the critical AI literacy skills that are increasingly essential for academic success and future employment, further widening the opportunity gap.

This resource gap translates directly into a two-tiered system of AI education. Wealthier districts have the capacity to invest in professional development for teachers, purchase subscriptions to vetted and secure AI platforms, and dedicate administrative time to crafting thoughtful, comprehensive policies. In contrast, under-resourced schools are often left with a difficult choice: either implement a blanket ban on AI tools, which can stifle innovation and leave students unprepared, or permit their use without the necessary guardrails, potentially exposing students and the institution to significant risks. This disparity ensures that a student’s ability to learn with and about AI is determined more by their zip code than by their potential. As AI becomes more integrated into society, this inequitable foundation for AI education threatens to create long-term disadvantages for students who are already the most vulnerable.

Navigating the Uncharted Territory of AI

Universal Fears Meet Localized Solutions

Despite the lack of a unified national strategy, education policymakers from coast to coast share a consistent and growing set of concerns regarding the proliferation of generative AI in schools. Topping the list are perennial issues like student safety and the protection of sensitive data, which take on new urgency when third-party AI tools are involved. There is also widespread apprehension that an over-reliance on AI could undermine the development of fundamental skills, such as critical thinking, analytical reasoning, and effective writing. Beyond the classroom, policymakers are wary of the outsized influence wielded by technology companies, fearing that tools currently offered for free will inevitably become costly subscription services that strain already tight school budgets. More novel threats have also emerged, including the malicious use of deepfake technology, with officials citing plausible fears of a student using a fabricated version of a principal’s voice to issue a bomb threat or cancel school.

While these fears are universally recognized, the solutions being developed to address them remain highly localized and inconsistent. In the absence of state or federal standards, each district is tasked with creating its own guardrails for everything from academic integrity to data protection. This has resulted in a chaotic regulatory landscape where the rules governing AI use can vary dramatically from one town to the next. A student in one district might be encouraged to use an AI chatbot as a collaborative learning partner, while a student just a few miles away could face disciplinary action for the very same behavior. This lack of standardization creates confusion for students, parents, and educators alike. It also places an enormous burden on teachers who may work in one district but live in another, forcing them to navigate a conflicting and often contradictory set of expectations for what constitutes ethical and appropriate use of AI technology.

The Shift from Prohibition to Preparation

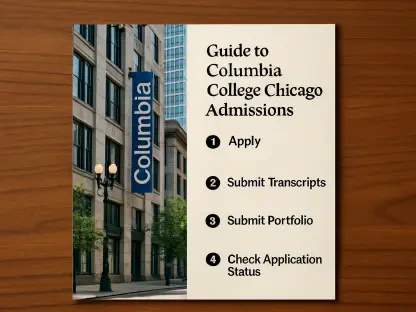

A broad consensus has emerged among education stakeholders that simply banning artificial intelligence is not a viable or sustainable long-term strategy. The prevailing sentiment is that the “horse has already left the barn,” and that attempts to prohibit the technology will only drive its use underground, making it impossible to guide or monitor. Consequently, the conversation has shifted decisively away from prohibition and toward preparation. The new focus centers on a more proactive stance: teaching students how to use AI tools critically, ethically, and effectively. The ultimate objective is to foster a relationship of human-AI collaboration, one that will not only prepare students for the realities of the modern workforce but also empower them to leverage AI as a powerful tool for learning and innovation, rather than viewing it as a shortcut or a threat to academic integrity.

To navigate this complex transition, many school systems and states have initiated pilot programs and other experimental adoption models. These initiatives are seen as crucial for understanding the practical challenges and opportunities of integrating AI into the curriculum. For instance, states like Indiana have begun funding competitive grants for schools to test state-approved AI platforms, allowing for controlled exploration. Such trials have brought critical issues to the forefront, including the discovery of algorithmic bias in AI-generated feedback on student writing, reinforcing the need for hands-on experience to understand the technology’s limitations. It has become clear that the path forward requires states to assume a “lighthouse” role, providing coherent guidance on ethics, equity, and safety. This approach is intended to prevent thousands of districts from operating in isolation and to ensure that local efforts culminate in beneficial and equitable outcomes for every student.