Imagine a classroom where technology personalizes learning for every student, adapting lessons to individual needs in real time, while teachers focus on mentoring rather than administrative tasks. With the advent of artificial intelligence (AI) in education, this once-distant dream is becoming reality. Across settings from preschool through higher education (PreK-20), AI promises to revolutionize how knowledge is imparted and absorbed. However, with great potential comes significant responsibility. This review examines a pioneering framework developed by researchers at the University of Kansas (KU), designed to guide the ethical and effective integration of AI in educational environments. Built on a human-centered approach, the framework offers a blueprint for harnessing AI’s capabilities while safeguarding core educational values.

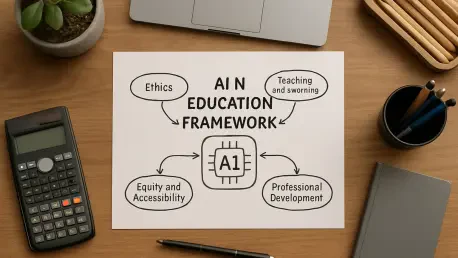

Core Features of the KU Framework

Building a Human-Centered Foundation

At the heart of this framework lies a commitment to prioritizing human judgment over automated processes. AI tools, while powerful, are positioned as supportive aids rather than decision-makers, especially in critical areas such as student assessments or behavioral interventions. Transparency stands as a key pillar, ensuring that educators and stakeholders understand how AI systems operate and arrive at recommendations. Compliance with legal standards, including the Individuals with Disabilities Education Act (IDEA) and the Family Educational Rights and Privacy Act (FERPA), is non-negotiable, protecting student rights and privacy in every application.

This emphasis on human oversight addresses a fundamental concern: the risk of depersonalizing education. By mandating that AI serve merely as an assistant, the framework preserves the essential teacher-student relationship. It also sets clear boundaries, preventing over-reliance on technology in scenarios where empathy and nuanced understanding are paramount, such as counseling or conflict resolution.

Strategic Planning for AI Adoption

Another critical feature is the call for meticulous, forward-thinking planning before AI integration. The framework advocates for the formation of diverse task forces comprising educators, administrators, families, and technology specialists to oversee implementation. These groups are tasked with conducting thorough audits and risk assessments to identify potential pitfalls, including the perpetuation of algorithmic biases that could unfairly impact certain student demographics.

Beyond risk mitigation, strategic planning ensures that AI tools align with the specific missions and needs of educational institutions. This tailored approach prevents a one-size-fits-all deployment, recognizing that a rural elementary school may have different priorities from an urban university. Such customization is vital for maximizing the technology’s benefits while minimizing disruptions to existing systems.

Inclusivity in Educational AI Deployment

Inclusivity forms a cornerstone of the framework, ensuring that AI serves all learners, regardless of ability or background. The guidelines explicitly prohibit AI from making final determinations on sensitive matters like Individualized Education Program (IEP) eligibility or disciplinary actions, reserving such decisions for human educators. This safeguard prevents potential inequities that could arise from automated judgments lacking context or cultural sensitivity.

Moreover, the framework encourages continuous feedback from students, teachers, and parents to refine AI applications. This participatory approach ensures that tools are responsive to real-world experiences, addressing disparities in access or outcomes. By focusing on learner-centered design, the framework aims to bridge gaps rather than widen them, fostering an equitable educational landscape.

Continuous Evaluation and Educator Training

Recognizing the fast-evolving nature of AI, the framework stresses the importance of ongoing evaluation to detect unintended consequences or inefficiencies in deployed tools. Regular assessments help identify whether systems are meeting intended goals or inadvertently causing harm, such as reinforcing stereotypes through biased data inputs. This iterative process is crucial for maintaining relevance and effectiveness over time.

Equally important is the provision for professional development. The framework calls for educators to receive both initial training and sustained learning opportunities so that they understand AI’s capabilities and limitations. Community engagement is also prioritized, building trust and ensuring that all stakeholders are informed about the role of technology in education, thus preventing misuse or misplaced expectations.

Performance and Real-World Impact

The practical applications of this framework demonstrate its versatility across diverse educational settings. In classrooms, AI tools guided by these principles can offer personalized learning supports, adapting content delivery to match a student’s pace or style. Administrative efficiencies, such as automated scheduling or resource allocation, free up valuable time for educators to focus on teaching rather than paperwork, enhancing overall productivity.

Case studies from various districts reveal the framework’s adaptability. In some schools, AI assists with early identification of learning challenges, providing data-driven insights while leaving final interventions to teachers. In higher education, AI streamlines processes like grading or course recommendations, while human oversight is maintained to ensure fairness. These examples underscore the framework’s ability to balance innovation with ethical considerations, tailoring solutions to unique institutional needs.

What sets this framework apart is its proactive stance on community involvement. By integrating stakeholder feedback into AI deployment, it ensures that technology remains a servant of educational goals rather than a detached force. This collaborative spirit enhances acceptance and effectiveness, suggesting that AI can indeed complement human efforts when guided by robust principles.

Emerging Trends Shaping AI in Education

Current trends in educational AI align closely with the framework’s vision, moving away from viewing technology as a standalone solution. Instead, there is a growing recognition of AI as a complementary tool that enhances human interaction rather than replacing it. Ethical considerations are gaining prominence, with increased scrutiny of data privacy and the potential for bias in automated systems, reflecting broader societal concerns about technology’s role.

Transparency has also become a focal point, as educators and families demand clarity on how AI influences learning environments. The dynamic nature of AI technology further necessitates adaptability, pushing institutions to invest in continuous learning for staff and students alike. These trends reinforce the framework’s relevance, positioning it as a timely guide for navigating an ever-changing landscape.

Challenges and Limitations

Despite its strengths, implementing AI under this framework is not without hurdles. Ethical dilemmas, such as ensuring fairness in AI-driven recommendations, remain a significant challenge, particularly when systems are trained on historical data that may embed existing prejudices. Student privacy, protected by laws such as FERPA, requires constant vigilance to prevent breaches or misuse of sensitive information.

Technical barriers also pose obstacles. Not all educational institutions possess the infrastructure or funding to adopt AI tools effectively, risking a digital divide between well-resourced and underfunded schools. Additionally, there is the danger of over-reliance on technology, where educators might defer too readily to AI outputs, undermining their own expertise. Addressing these issues demands ongoing support and resources, a commitment the framework acknowledges through its emphasis on evaluation and training.

Final Thoughts and Next Steps

On reflection, the KU framework for responsible AI integration in education proves to be a thoughtful and comprehensive guide. Its human-centered principles, strategic focus, and commitment to inclusivity provide a robust foundation for leveraging technology without compromising educational integrity. The real-world applications showcase its practical value, while the attention to challenges highlights a realistic understanding of AI’s complexities.

Looking ahead, educational leaders should prioritize building diverse task forces to tailor AI adoption to local needs, ensuring no community is left behind. Investing in educator training must remain a key focus, equipping staff with the skills to use AI as a partner rather than a crutch. Finally, fostering open dialogue with students and families will be essential to maintain trust, ensuring that AI serves as a bridge to better learning outcomes for all. These steps offer a clear path to realizing the transformative potential of AI in education.